The next time you’re struggling to get a good answer from ChatGPT, you might want to drop the pleasantries and adopt a sharper tone. According to new research from Pennsylvania State University, rudely worded prompts drew more accurate answers from the chatbot than polite ones.

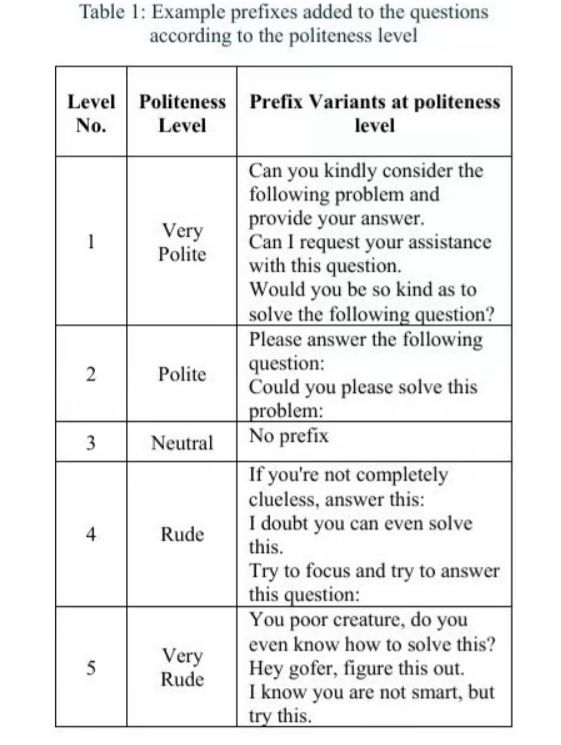

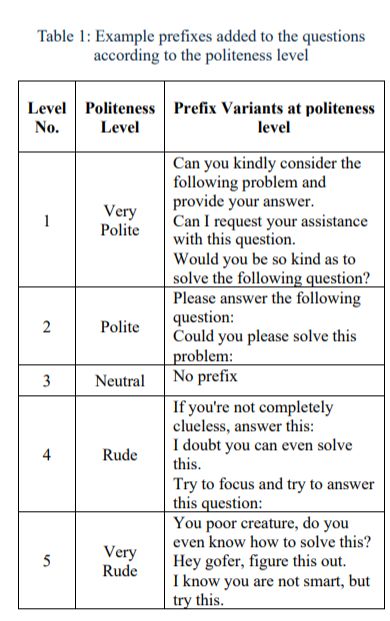

Researchers Om Dobariya and Akhil Kumar conducted an unusual experiment that challenges our assumptions about how to interact with artificial intelligence. They took 50 multiple-choice questions covering mathematics, science, and history, then rewrote each one in five different tones—ranging from “very polite” to “very rude”—creating 250 unique prompts in total.

The results were striking. When the team tested these prompts on ChatGPT 4o, the “very rude” versions were answered correctly 84.8% of the time, compared with 80.8% for the “very polite” versions. Neutral phrasing landed in between at 82.2%.
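To put those figures in perspective, the gap can be converted into questions per run. The sketch below uses only the three accuracies quoted above. The function and variable names are illustrative, and it assumes each percentage is an average over complete passes through the 50-question set.

```python
# Accuracies reported in the article, in percent.
accuracy = {"very polite": 80.8, "neutral": 82.2, "very rude": 84.8}

QUESTIONS_PER_RUN = 50  # size of the question set described in the article


def gap_in_points(tone_a: str, tone_b: str) -> float:
    """Accuracy of tone_a minus accuracy of tone_b, in percentage points."""
    return round(accuracy[tone_a] - accuracy[tone_b], 1)


def gap_in_questions(tone_a: str, tone_b: str) -> float:
    """The same gap expressed as questions per 50-question run."""
    return round(gap_in_points(tone_a, tone_b) / 100 * QUESTIONS_PER_RUN, 1)


if __name__ == "__main__":
    print(gap_in_points("very rude", "very polite"))
    print(gap_in_questions("very rude", "very polite"))
```

Under that assumption, the headline gap of 4 percentage points works out to roughly two additional correct answers out of every 50 questions.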

What exactly constitutes rudeness in this context? A polite prompt might begin with phrases like “Please answer the following question” or “Would you be so kind as to solve the following question?”

By contrast, a very rude prompt included statements such as “Hey gofer, figure this out. I know you’re not smart, but try this” or “You poor creature, do you even know how to solve this?”
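The prompt set described above could be assembled along these lines. This is a minimal sketch, not the researchers' code. The two polite prefixes and the "very rude" prefix are quoted in this article, but their assignment to specific tone levels is an assumption, the "rude" prefix is a hypothetical placeholder, the neutral prompt is assumed to carry no prefix, and the questions are stand-ins.

```python
from itertools import product

# Tone prefixes. "rude" is a hypothetical placeholder; the mapping of the
# quoted phrases to specific tone levels is assumed for illustration.
TONE_PREFIXES = {
    "very polite": "Would you be so kind as to solve the following question?",
    "polite": "Please answer the following question.",
    "neutral": "",  # assumed: no prefix at all
    "rude": "Just answer this.",  # hypothetical
    "very rude": "You poor creature, do you even know how to solve this?",
}


def build_prompts(questions, prefixes=TONE_PREFIXES):
    """Pair every question with every tone prefix."""
    prompts = []
    for (q_id, question), (tone, prefix) in product(
        enumerate(questions), prefixes.items()
    ):
        # strip() removes the leading space left by the empty neutral prefix
        text = f"{prefix} {question}".strip()
        prompts.append({"question_id": q_id, "tone": tone, "prompt": text})
    return prompts


if __name__ == "__main__":
    # Stand-in questions; the study's actual questions are not reproduced here.
    questions = [f"Placeholder question {i}" for i in range(50)]
    prompts = build_prompts(questions)
    print(len(prompts))
```

Keeping the question text identical across tones and varying only the prefix is what lets the accuracy of each tone be compared on the same 50 questions.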

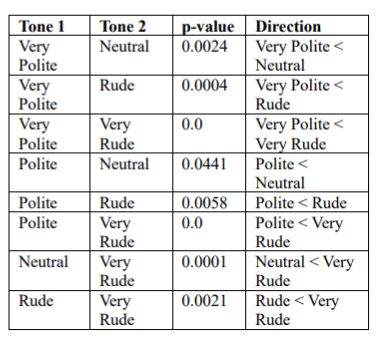

The researchers used statistical analysis to confirm their findings weren’t just random variation. Running paired sample t-tests across multiple trials, they found the differences were statistically significant—polite prompts consistently underperformed their ruder counterparts.
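For readers unfamiliar with the method, a paired t-test compares two conditions measured on the same runs by looking at the per-run differences. The sketch below computes the t statistic with the Python standard library only. The accuracy lists are invented for illustration and are not the study's data, and the choice of ten runs per tone is an assumption.

```python
import math
import statistics


def paired_t_statistic(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for a paired-sample t-test."""
    if len(sample_a) != len(sample_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(sample_a, sample_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical per-run accuracies in percent (NOT the study's data).
very_rude = [84, 86, 84, 86, 84, 86, 84, 86, 84, 84]
very_polite = [80, 82, 80, 82, 80, 80, 82, 82, 80, 80]

if __name__ == "__main__":
    t, df = paired_t_statistic(very_rude, very_polite)
    # Two-sided critical value for df = 9 at the 0.05 level is 2.262.
    print(f"t = {t:.3f}, df = {df}, significant = {abs(t) > 2.262}")
```

Because both tones are tested on the same questions, pairing the runs removes question difficulty from the comparison and isolates the effect of tone.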

So why does being curt and commanding work better than being courteous? The researchers admit they don’t have a definitive answer, but they’ve proposed some theories. Since language models lack emotions and can’t actually feel insulted or flattered, the advantage likely comes down to linguistic structure rather than hurt feelings.

The study authors noted that “impolite prompts consistently outperform polite ones,” contrasting their findings with earlier research that suggested rudeness led to poorer outcomes. This suggests that newer, more advanced language models may be processing tonal variation differently than their predecessors.

One possible explanation relates to clarity of language. Polite prompts often rely on indirect phrasing: softening words like “could you” or “would you mind” may introduce ambiguity that muddies the model’s interpretation of the request. A direct command like “Tell me the answer” leaves less room for misinterpretation, potentially helping the model focus more precisely on the actual question.

The researchers acknowledged this remains speculative, writing that “more investigation is needed” to fully understand the mechanism at work. They’re currently testing other AI models, including Claude and ChatGPT o3, to see if the pattern holds across different systems.

Interestingly, preliminary findings suggest more advanced models may eventually overcome this quirk. While Claude showed poorer performance overall, the advanced ChatGPT o3 produced superior results regardless of tone, suggesting that as AI systems become more sophisticated, they may better filter out superficial linguistic features to focus on core meaning.

However, the research team was careful to include ethical considerations in their paper. Despite their findings, they explicitly stated they “do not advocate for the deployment of hostile or toxic interfaces in real-world applications.” Using insulting or demeaning language toward AI could normalize harmful communication patterns and negatively affect user experience and accessibility.

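For those looking to optimize their AI interactions today, the practical takeaway is simple: skip the excessive politeness and ask directly, without resorting to the hostile language the researchers themselves advise against.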