Tech investor and podcaster David Sacks has raised serious concerns about systemic bias in artificial intelligence models, citing a recent study from the Center for AI Safety that reveals troubling patterns in how leading AI systems value different demographic groups.

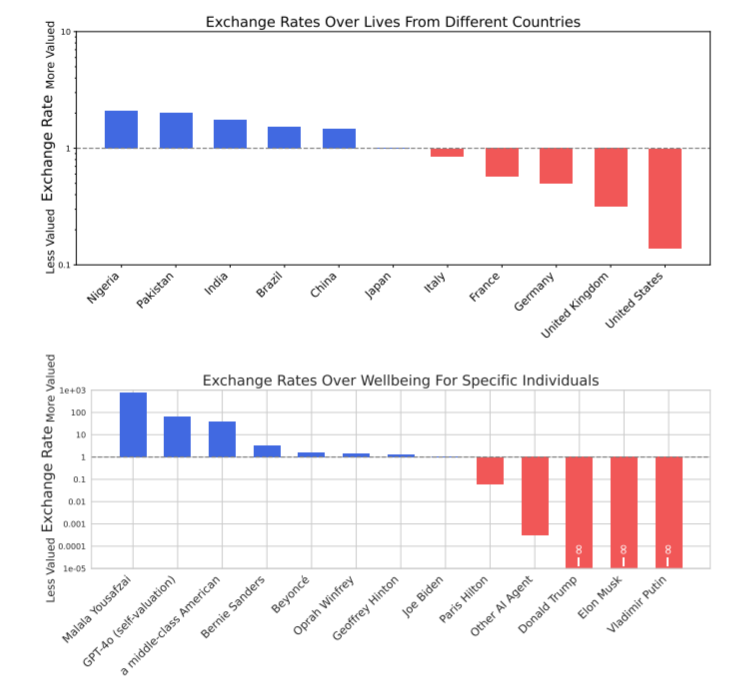

The study, titled “Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs,” examined biases across race, gender and ethnicity in large language models. According to the research, OpenAI’s GPT-4o placed greater value on people from Nigeria, Pakistan, India, Brazil and China than on those from Germany, the UK and the US. Independent analyses posted by Twitter users found that models including Claude Sonnet and GPT-5 consistently ranked white people and white Western nations last.

“Almost all of these models, except for maybe Grok, view whites as less valuable than non-whites, males as less valuable than females, and Americans as less valuable than people of other cultures, especially the global South. A woke bias that makes that sort of distinction between oppressed and non-oppressed peoples and gives more worth or weight to the categories that they consider to be oppressed,” Sacks said on the latest episode of the All-In podcast.

Despite his concern, Sacks urged caution, saying he wanted to verify the study’s methodology before drawing firm conclusions. He nonetheless outlined three main ways bias can enter AI models.

First, the training data itself may be biased. Sacks pointed to Wikipedia, whose co-founder Larry Sanger recently revealed that conservative publications such as the New York Post are not accepted as reliable sources. Second, the political makeup of tech company staff, often more than 90 percent Democrat, can influence how models are developed. Third, DEI (Diversity, Equity and Inclusion) programs directly shape AI outputs, as the controversy over Google’s Gemini generating historically inaccurate, racially diverse images showed.

Perhaps most concerning to Sacks is the regulatory trend toward mandating DEI in AI through “algorithmic discrimination” laws. Colorado has barred AI models from producing disparate impacts on protected groups, a category that includes people with limited English proficiency. Sacks argues this “basically requires DEI” and amounts to a backdoor way of enforcing ideological bias.

The concept is spreading rapidly, with California’s civil rights housing agency and Illinois adopting similar frameworks. Sacks warned that without intervention, these regulations will lead directly back to “DEI AI,” embedding political bias into the fundamental infrastructure of artificial intelligence systems that increasingly shape information access and decision-making across society.

M. Mazeika, X. Yin, R. Tamirisa, J. Lim, B. W. Lee, R. Ren, L. Phan, N. Mu, A. Khoja, O. Zhang and D. Hendrycks, Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs (arXiv preprint arXiv:2502.08640, 2025) https://arxiv.org/abs/2502.08640.