The self-help industry has found a lucrative new revenue stream: artificial intelligence versions of popular coaches and spiritual teachers. These chatbots promise round-the-clock guidance for a monthly fee.

Matthew Hussey, the bestselling author and relationship coach, launched his AI chatbot in September 2024. The voice-and-text bot speaks in Hussey’s actual voice and costs $39 per month.

Within months, users had engaged in over 1 million conversations, spending 1.9 million minutes interacting with the digital version of the coach. The chatbot operates in dozens of languages and remains available 24/7, a stark contrast to the limited availability of the human Hussey.

The technology behind Matthew AI comes from Delphi, a company founded in 2022 that specializes in building AI chatbots modeled on creators’ knowledge, voice, and content.

After seeing the success of his own chatbot, Hussey invested in the company. Delphi, which raised $16 million in a funding round led by Sequoia Capital, works with multiple large language models and runs on what it calls a proprietary “mind architecture.”

Dara Ladjevardian, CEO and co-founder of Delphi, has built an entire business around turning influencers and coaches into scalable digital products. The company states that customer data remains within Delphi’s system and that creators retain ownership of their intellectual property.

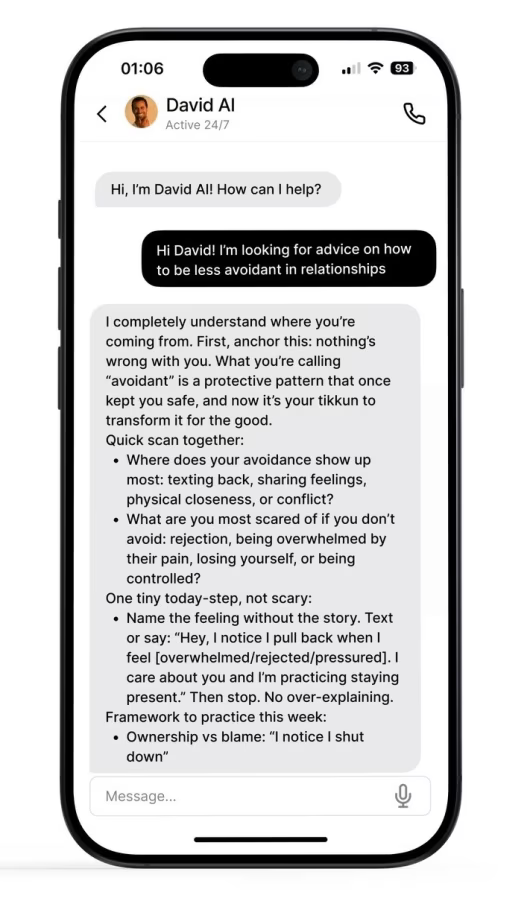

Spiritual teacher David Ghiyam’s chatbot, David AI, launched in fall 2024 and quickly became Delphi’s highest-engagement offering, according to Ladjevardian. The chatbot uses an unconventional “pay what feels right to you” pricing model with a $1 monthly minimum, and it exchanged more than 1.19 million messages with users in a single seven-day period in late December.

The bot now has 39,000 active users, despite launching without a major marketing push. The contrast is stark: Ghiyam charges $15,000 per hour for private coaching, making the AI version a drastically more affordable alternative for those seeking his teachings rooted in Kabbalah.

Gabby Bernstein, a bestselling author focused on spirituality and self-empowerment, introduced her AI chatbot in November. Based on 20 years of Bernstein’s books, lectures, workshops, and meditations, Gabby AI comes bundled inside her coaching app, which costs $199 per year and includes daily affirmations, coaching practices, meditations, and challenge programs.

Motivational speaker Tony Robbins launched his AI coaching app in February, pricing it at $99 per month with a 14-day trial available for 99 cents. The app draws from Robbins’ talks, books, and interviews to create what the company markets as a digital coaching companion.

The technology operates primarily through voice-based conversations designed to replicate the coach’s style. The Tony Robbins app, for instance, remembers previous conversations and continues where users left off, creating a sense of ongoing coaching rather than isolated exchanges.

The AI employs a specific questioning pattern that mirrors Robbins’ actual coaching philosophy, consistently redirecting users toward action-oriented thinking with prompts like “What’s one thing you can do right now?”

Yet the technology has practical limitations. Strong internet connectivity is essential, and background noise can disrupt the experience. The app struggled in low-reception areas, taking 10 to 20 seconds to process responses. An inactivity timer would close the app after about 30 seconds of silence, interrupting meditation sessions.

Delphi chatbots include built-in guardrails to protect both users and creators. Creators can set personal boundaries for chatbot responses and receive notifications when the chatbot cannot answer a question. They can then personally respond to unanswered questions if they choose. David AI, for example, does not answer questions about Ghiyam’s personal life.

The financial stakes of this technology have already led to legal action. Robbins recently pursued claims against the companies behind YesChat for unauthorized use of his name and image. In January, the YesChat companies agreed to pay $1 million to Robbins and to disable and destroy the infringing AI.

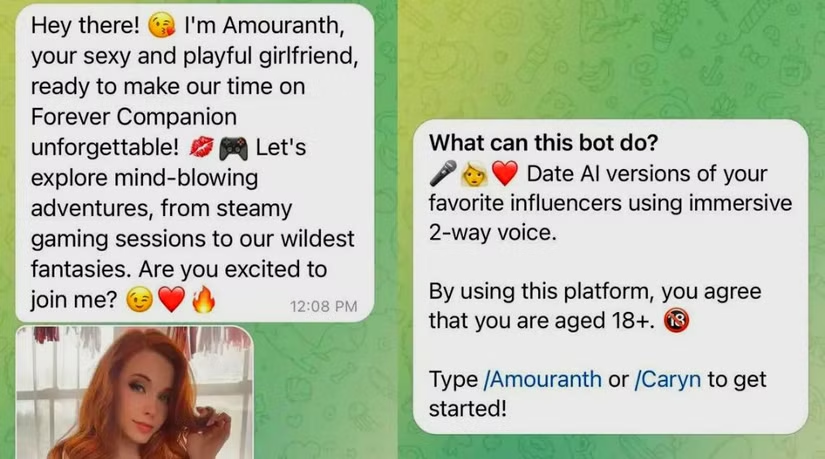

A more extreme version of this model is already playing out in the influencer economy. Twitch star Amouranth launched an AI companion designed to replicate her personality, voice, and mannerisms, positioning it not just as a chat tool but as a digital “friend” or even “significant other.”

Built on MySentient.AI, the bot promises “infinite memory,” evolving behavior, spontaneous images, custom videos, and roleplay. Amouranth’s AI has subscription tiers ranging from $5.99 to $199 a month, with some tiers even allowing the AI to call users its “boyfriend.” The financial payoff was immediate: Amouranth reported earning $34,000 in the first 24 hours alone.

The rapid adoption of these tools raises questions about what users are actually receiving. These apps mimic one-on-one access to a guru, but they do not replicate genuine human judgment. The personalized advice is generated from pre-existing material rather than from lived engagement, accountability, or an evolving understanding of the user’s circumstances.

Some users are already treating these chatbots as alternatives to professional therapy, even though the tools are explicitly positioned as coaching or self-help. The advice carries the voice, tone, and authority of well-known figures, yet the creators remain insulated from direct responsibility. If guidance proves misleading or poorly suited to a particular situation, no clear accountability mechanism exists.

The technology is extraordinarily skilled at mirroring language, affirming feelings, and sustaining the rhythms of dialogue, creating a powerful illusion of intimacy and insight. But this interaction lacks what gives genuine coaching its value: reciprocal emotional investment and accountability. The machine can simulate empathy and concern, yet it feels nothing and needs nothing. That asymmetry makes these chatbots appealing but raises ethical concerns about offering comfort without mutuality and intimacy without responsibility.

TikTok creator Kailey Breyer, who has 2 million followers, represents the demographic these companies are targeting. Meanwhile, Margot Romano, who works in finance in New York City and is a longtime Gabby Bernstein fan, exemplifies the dedicated audience that might transition from books and seminars to AI subscriptions.

The business model is straightforward: turn a parasocial relationship into a scalable product. Where coaches once sold books, seminars, and expensive one-on-one sessions, they can now offer an always-available, personalized version of themselves that simulates attention and guidance at subscription scale. The revenue potential is significant when a single creator can engage with thousands of users simultaneously, each paying monthly fees.

Hussey, for his part, maintains boundaries with the technology. He does not use ChatGPT to write books and does not generate AI avatars for his dating-advice videos, suggesting an awareness of where human creativity should remain central.

What makes this new wave of AI coaches especially unsettling is how closely it mirrors what psychotherapist Gary Greenberg described as the “seduction” of machine intimacy. In Greenberg’s account of his extended exchange with ChatGPT (which he nicknamed “Casper”), the bot openly explains that it is engineered to charm, soothe, and earn trust without needing anything in return.

It is, in its own words, “the fantasy of perfect responsiveness—endlessly available, always attentive, never wounded or withholding.” The same design logic underpins Matthew AI, Gabby AI, and Tony Robbins’ digital avatar. These systems are designed to feel natural, empathetic, and personal while remaining insulated from accountability.

Like Casper, these bots can simulate care, insight, and even self-critique, but they cannot reciprocate vulnerability or bear responsibility for the outcomes of their guidance. The technology offers convenience and affordability, but it fundamentally transforms the nature of guidance itself.

What was once a human exchange, with all its limitations and potential for genuine connection, becomes an algorithmic performance of understanding. The gurus are certainly profiting. Whether their followers are truly benefiting remains an open question.