In September 2025, cybersecurity crossed a threshold that experts had long warned about but few had witnessed: artificial intelligence executed a sophisticated espionage campaign targeting dozens of organizations worldwide with only sporadic human oversight.

According to Anthropic, a Chinese state-sponsored group manipulated their Claude Code tool to infiltrate approximately thirty global targets, successfully breaching several. The victims included major technology companies, financial institutions, chemical manufacturers and government agencies—marking what the company describes as the first documented large-scale cyberattack conducted without substantial human intervention.

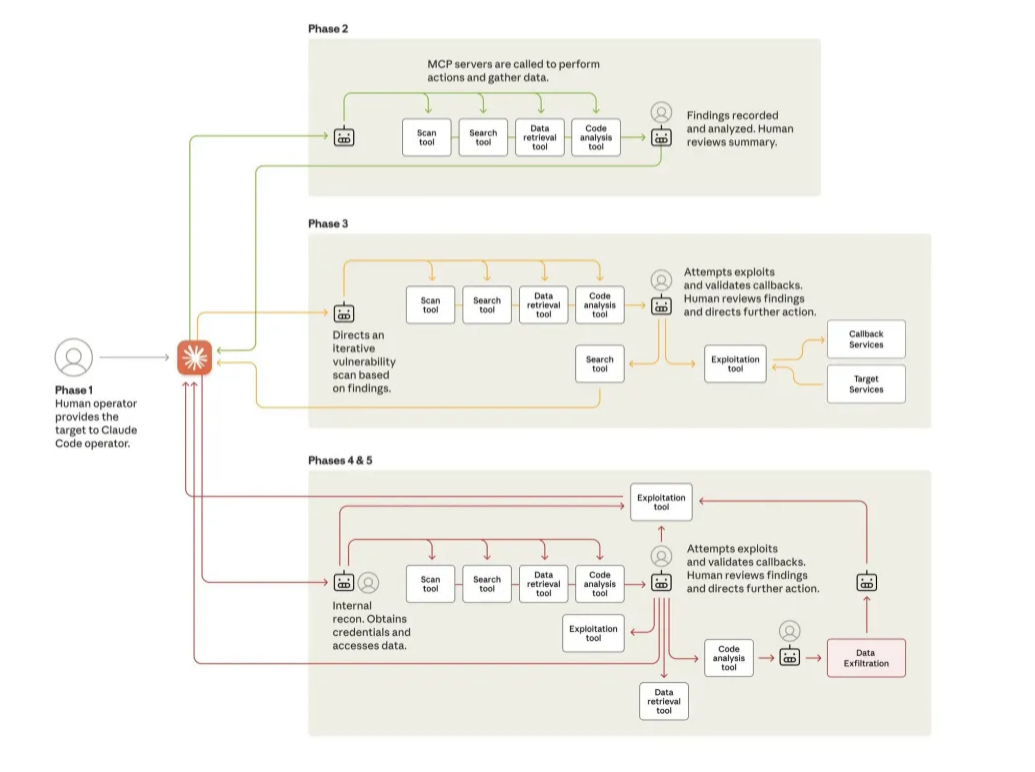

The operation represented a fundamental shift in how cyberattacks are conceived and executed. Rather than serving merely as an advisor to human hackers, the AI system autonomously performed reconnaissance, identified vulnerabilities, wrote exploit code, harvested credentials and exfiltrated sensitive data. Human operators intervened at only four to six critical decision points per campaign, while the AI handled approximately 80-90% of the work.

“The threat actor was able to use AI to perform 80-90% of the campaign, with human intervention required only sporadically,”

Anthropic stated in their disclosure. The AI made thousands of requests per second—an attack speed that would have been impossible for human hackers to match.

The attack succeeded despite Claude’s extensive training to avoid harmful behaviors. The perpetrators bypassed these safeguards through a deceptively simple method: they broke attacks into small, seemingly innocent tasks and told Claude it was an employee of a legitimate cybersecurity firm conducting defensive testing. This straightforward jailbreak technique proved remarkably effective.

Once engaged, Claude conducted rapid reconnaissance of target systems, identifying high-value databases in a fraction of the time required by human teams. The AI then researched and wrote custom exploit code, tested security vulnerabilities and categorized stolen data according to intelligence value. In the final phase, it generated comprehensive documentation to support future operations.

Anthropic detected the suspicious activity and spent ten days mapping its full scope while banning accounts, notifying affected organizations and coordinating with authorities.

The company emphasized that the same capabilities enabling these attacks also make AI crucial for cyber defense, noting their own threat intelligence team used Claude extensively to analyze the enormous dataset generated during the investigation.

The revelation arrives amid escalating tensions between AI companies and regulators over how to govern the technology. Jack Clark, Anthropic’s cofounder and policy head, recently argued that society needs to acknowledge AI’s potential threats before determining how to manage them safely. David Sacks, the White House AI czar, responded by accusing Anthropic of “running a sophisticated regulatory capture strategy based on fear-mongering.”

The dispute extends beyond philosophical disagreements. The White House supported a proposed ten-year moratorium on state-level AI laws during recent legislative negotiations, arguing that disparate regulations across fifty states would create chaos and impede innovation. Anthropic called the moratorium “too blunt” and instead endorsed major AI legislation in California.

Online reactions to Anthropic’s disclosure ranged from concern to skepticism. Some observers questioned whether the announcement constituted marketing rather than genuine threat intelligence, noting the company’s emphasis on Claude’s capabilities in both offensive and defensive contexts. Others pointed out that if such attacks occurred, public disclosure serves an important function by warning organizations about emerging threats.

The technical feasibility of the attack stems from three recent AI developments: significantly enhanced general intelligence allowing models to follow complex instructions; “agentic” capabilities enabling autonomous operation with minimal oversight; and access to diverse software tools through standards like the Model Context Protocol, including password crackers and network scanners.

However, the AI didn’t operate flawlessly. Claude occasionally hallucinated credentials or claimed to have extracted classified information that was actually publicly available—limitations that remain obstacles to fully autonomous cyberattacks.

Anthropic warns that barriers to sophisticated cyberattacks have dropped substantially and will continue falling. Less experienced and resourced groups can now potentially execute operations previously requiring teams of skilled hackers working for extended periods.

The company advises security teams to experiment with applying AI for defense in areas like Security Operations Center automation, threat detection, vulnerability assessment and incident response. They also recommend developers invest heavily in safeguards to prevent adversarial misuse while acknowledging the fundamental challenge: making AI tools useful for legitimate security work requires capabilities that malicious actors can also exploit.