When YouTube creator Seth from Berm Peak discovered someone was impersonating him to defraud bicycle companies, it marked a disturbing evolution in online scams.

According to Seth’s recent YouTube video, the impersonator successfully convinced multiple businesses to ship tens of thousands of dollars’ worth of electric bikes to an address in Greensboro, North Carolina, using AI-assisted techniques that made detection nearly impossible.

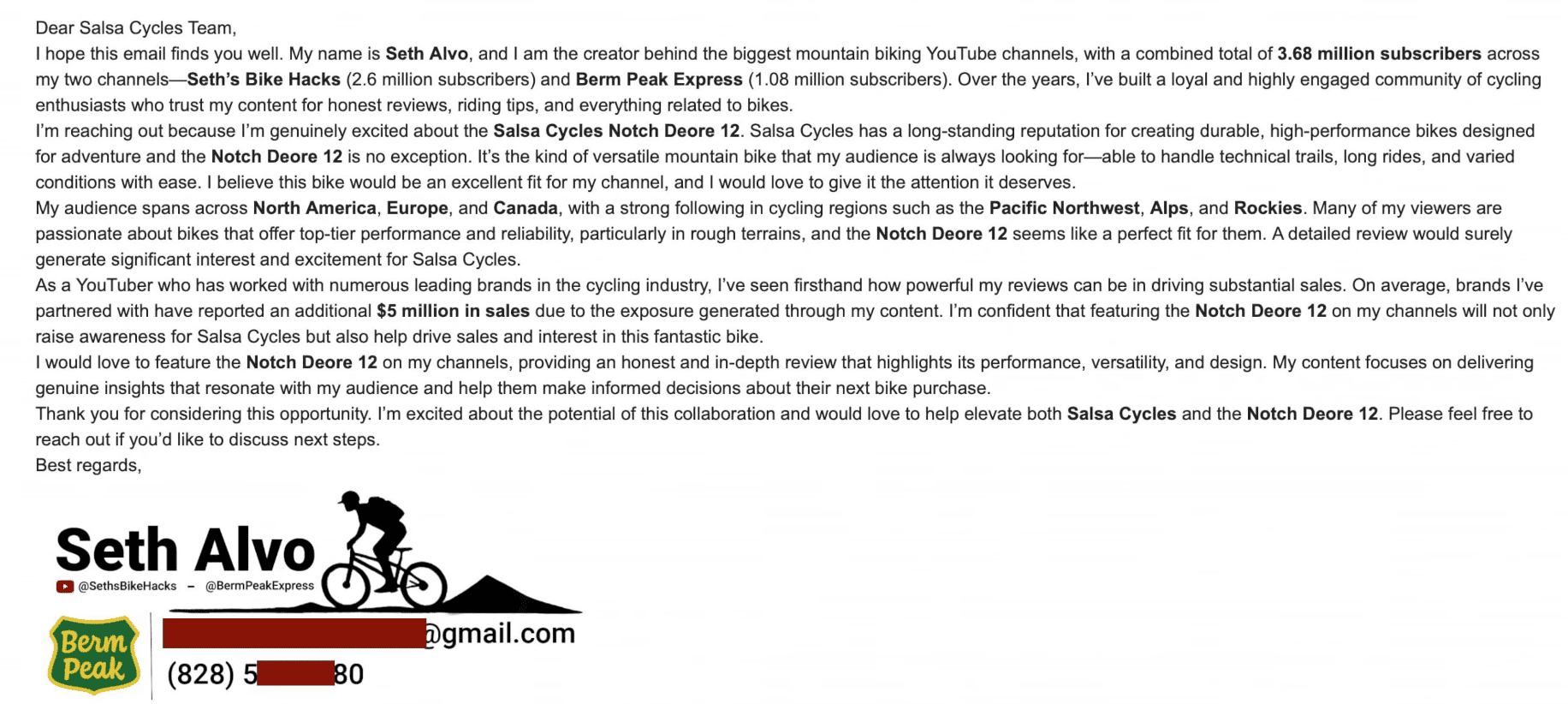

The scammer didn’t rely on typical poor grammar or generic copy-paste messages. Instead, he crafted personalized emails that mimicked Seth’s communication style, created fake business proposals, forged YouTube analytics data, and engaged in detailed technical conversations that demonstrated intimate knowledge of Seth’s content.

The impersonator used a convincing Gmail address and a Google Voice number with a local 828 area code, and even provided a North Carolina mailing address to match Seth’s known location.

Daniel, who manages Seth’s communications and has years of experience in the cycling industry, first learned of the scam when Superhuman Bikes reached out with suspicions. The company’s representative, Gabe, noticed something seemed off about the email address. When Daniel confirmed it wasn’t legitimate, they examined the full email chain and found messages filled with overly formal language that no content creator would actually use.

In one message, the scammer wrote elaborate details about trail plans and bike specifications, stating he was “particularly excited to take it on a trail here near Asheville, North Carolina that boasts a 7-mile ascent with an 18,800 ft elevation gain, followed by a thrilling one-mile descent.” The language betrayed artificial intelligence assistance. As Seth noted, “I would never say followed by a thrilling descent, but a large language model would.”

The fraud extended to multiple companies, including Goat Power Bikes, Salsa, Scott, N+, and Transition Bikes. The scammer strategically targeted brands Seth hadn’t publicly collaborated with, suggesting he was a long-time viewer who had studied the channel extensively. When pressed for commitments, he claimed to have been displaced by Hurricane Helene and to be staying with family, despite Seth having posted a video showing minimal damage to his home.

Rather than immediately warning the industry, Daniel and Seth made a calculated decision to work with law enforcement to catch the perpetrator. They coordinated with multiple companies to string the scammer along while gathering evidence. When Transition Bikes revealed they had already shipped a bike, investigators gained crucial information including a tracking number and confirmed delivery address.

The Greensboro Police discovered the suspect was on probation, which provided additional legal authority to investigate. After observing multiple bike boxes on the suspect’s porch, officers visited the residence and found what resembled a bicycle store stockroom. The individual attempted to claim he knew Seth and had permission for the transactions.

This case highlights how generative AI has transformed email fraud. The technology enables scammers to create highly personalized, professionally written correspondence that passes initial scrutiny. Gone are the easily identifiable scam emails with obvious red flags. Today’s fraudsters produce bespoke messages with specific details and convincing follow-up responses.

Several brand managers deserve credit for noticing subtle inconsistencies and reaching out through proper channels to verify the communications. Their diligence prevented further losses and helped build the case against the perpetrator.

Seth encourages anyone experiencing similar fraud to file reports with the FBI’s Internet Crime Complaint Center at ic3.gov, including screenshots and evidence. While individual reports may not yield immediate results, they help authorities identify patterns, build detection models, and potentially link multiple crimes to a single perpetrator.

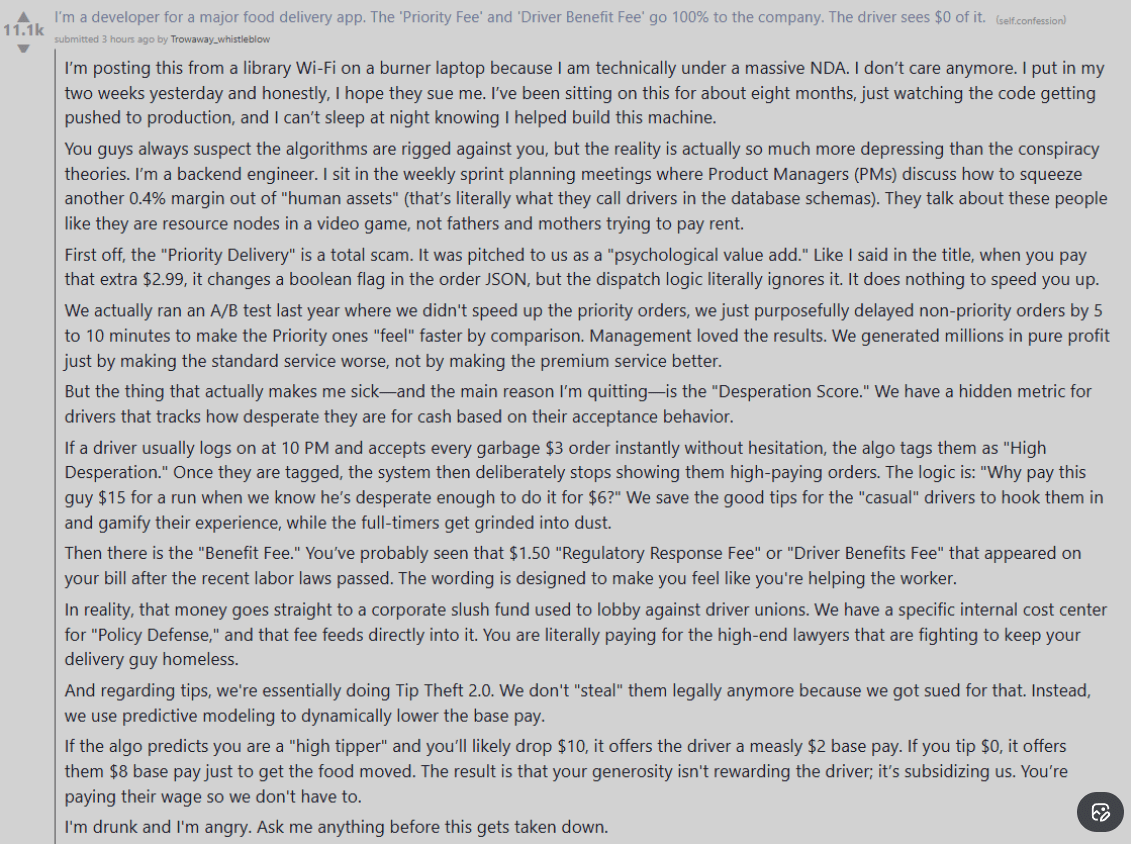

To understand how believable the Berm Peak scam was, it helps to place it alongside another recent viral deception that unfolded entirely online. In early January, a Reddit post claiming to expose massive fraud inside a food delivery company (widely assumed to be Uber Eats) exploded across the internet, racking up tens of thousands of upvotes and millions of views.

The anonymous “whistleblower” alleged the existence of a hidden “desperation score” used to exploit delivery drivers, along with other manipulative practices that neatly confirmed the public’s worst suspicions about gig-economy platforms. The story felt too detailed, too technical, and too emotionally resonant to ignore, and that was precisely the point.

As sources later revealed, the whistleblower’s evidence was entirely fabricated using generative AI. The individual produced a fake employee badge generated by Google Gemini and an 18-page technical document filled with charts, equations, and official-sounding language that initially passed a basic sniff test.

Only after deeper scrutiny did inconsistencies emerge: companies don’t document sinister schemes so openly, the technical language collapsed under expert review, and the “evidence” itself was flagged as AI-generated. The hoax demonstrated how AI can now be used not just to impersonate people, as in Seth’s case, but to manufacture entire realities, complete with documents, credentials, and insider jargon, convincing enough to fool journalists, brands, and massive online audiences before the truth can catch up.

AI has made deception scalable, personalized, and disturbingly credible. In both cases, the scams only unraveled because experienced professionals slowed down, verified sources through independent channels, and trusted subtle instincts that “something felt off.”