Ashton Kutcher has long positioned himself as one of Hollywood’s most vocal advocates against child exploitation. Through his nonprofit Thorn, co-founded with his then-wife Demi Moore in 2010, Kutcher has lobbied governments and tech companies to adopt stronger tools to detect and remove child sexual abuse material (CSAM) online.

But as Kutcher pushes European lawmakers to expand digital surveillance in the name of child protection, newly surfaced documents tied to Jeffrey Epstein’s case have raised uncomfortable questions about his past associations.

In 2022, the European Commission introduced draft legislation aimed at combating child sexual abuse material online. Sources state that the proposal would require messaging services, cloud storage providers, social media platforms, and email services to scan user communications for illegal content.

Supporters, including Interpol and child protection organizations like Thorn, argue that tech companies already scan for terrorism-related material and that similar tools could help identify offenders and rescue victims.

Opponents, however, including Signal, WhatsApp, and X, say the proposal threatens encryption, privacy, and fundamental digital rights. Critics warn it could open the door to mass surveillance and false positives generated by automated AI systems.

Importantly, the law would not allow governments to directly read private messages. Instead, companies would be required to build scanning systems themselves and report flagged material to authorities.

In July 2025, Denmark introduced a compromise proposal categorizing platforms by risk level. Only “high-risk” services would initially be required to scan visual content and URLs, potentially through client-side scanning, meaning content would be analyzed before encryption. Text and voice messages would be excluded at first, though the scope could expand later.

As of late 2025, the proposal remains stalled due to disagreement among EU member states.

Throughout this debate, Thorn has lobbied in favor of scanning mandates. In 2022, it signed an open letter thanking EU officials for introducing the legislation and urging its passage.

According to sources, the fundamental issue with Thorn centers on transparency. While the organization claims to help law enforcement identify exploited children through their Spotlight software, journalists and data protection experts have raised serious concerns.

European data protection supervisors warned that stakeholders may be misleading the public, even if unintentionally. The organization’s own policy documents contradict public statements Kutcher made to the European Parliament about its technology’s capabilities.

According to Thorn’s internal papers, their system can only identify previously known images and videos. Their documents explicitly state the technology does not comprehensively detect new material.

The alternative method Thorn proposed, called homomorphic encryption, requires computing power that current devices simply cannot provide. Cryptography researcher Anna Leman called their privacy claims absurd, noting that no privacy-friendly technology currently exists that can scan for these images in encrypted environments.

Multiple journalists have challenged Thorn’s reported numbers. FBI data suggests Thorn claims involvement in more trafficking investigations than actually exist across the entire United States. Furthermore, advocacy groups working directly with trafficking survivors have expressed that Thorn’s tools primarily surveil adult entertainment workers rather than helping genuine survivors.

The financial picture raises additional questions. Thorn operates as a tax-exempt charity, yet their 990 forms show investments in A-Grade Holdings, Kutcher’s own venture capital fund co-owned with billionaire Ron Burkle.

Additionally, Thorn restricts disclosure of their donor list, making it impossible to verify who funds the organization. They claim to provide services free to law enforcement, yet financial documents show millions in subscription revenue with no explanation of the source.

The organization spent nearly one million dollars on crisis consulting firm FGS Global in 2023, the same year Kutcher resigned from the board following backlash over his support letters for convicted offender Danny Masterson.

Salary expenses also warrant scrutiny. When Thorn began paying salaries in 2018, the total was $2.5 million. By 2019, that had jumped to $5 million, and by 2023 it reached $14 million for approximately 108 employees.

Complicating matters further are newly released documents related to Jeffrey Epstein and his longtime associate Ghislaine Maxwell.

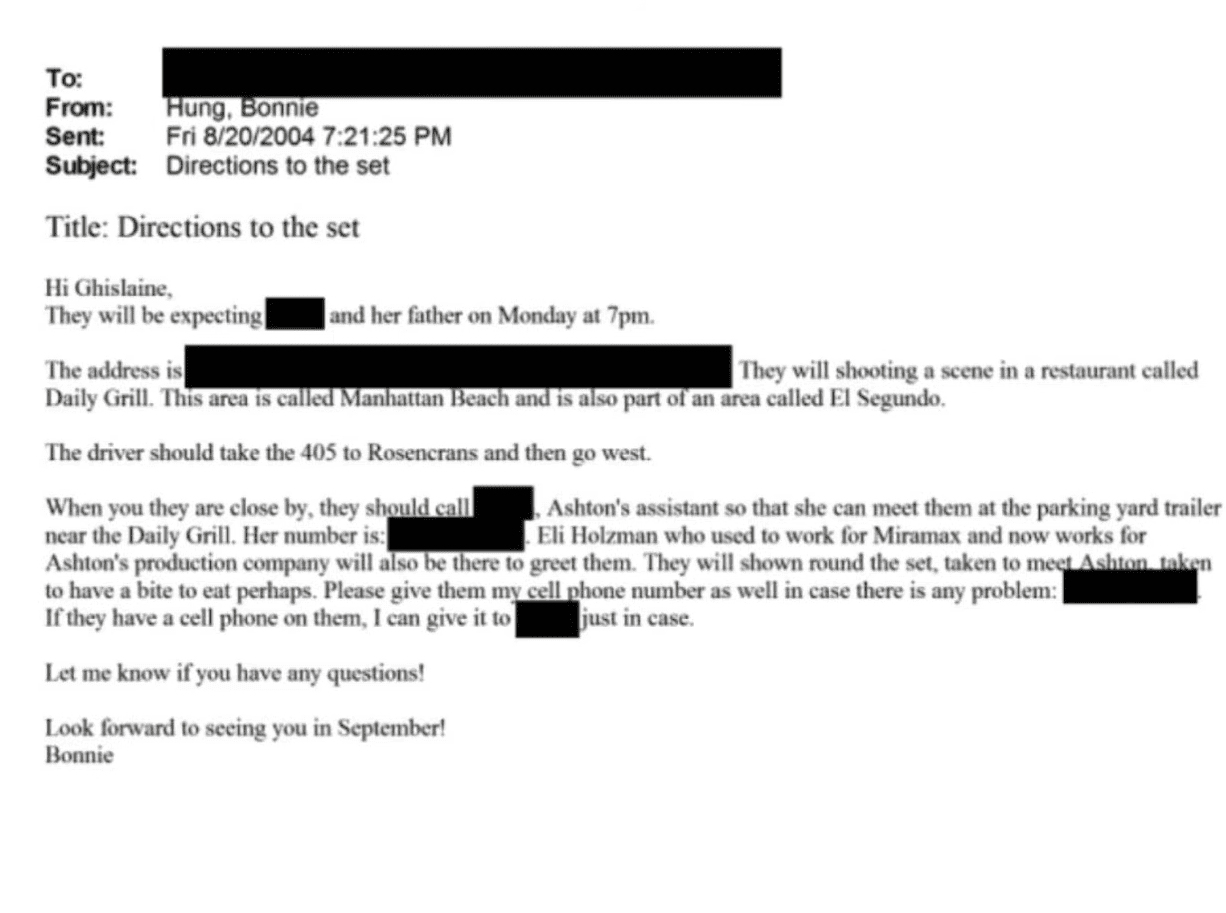

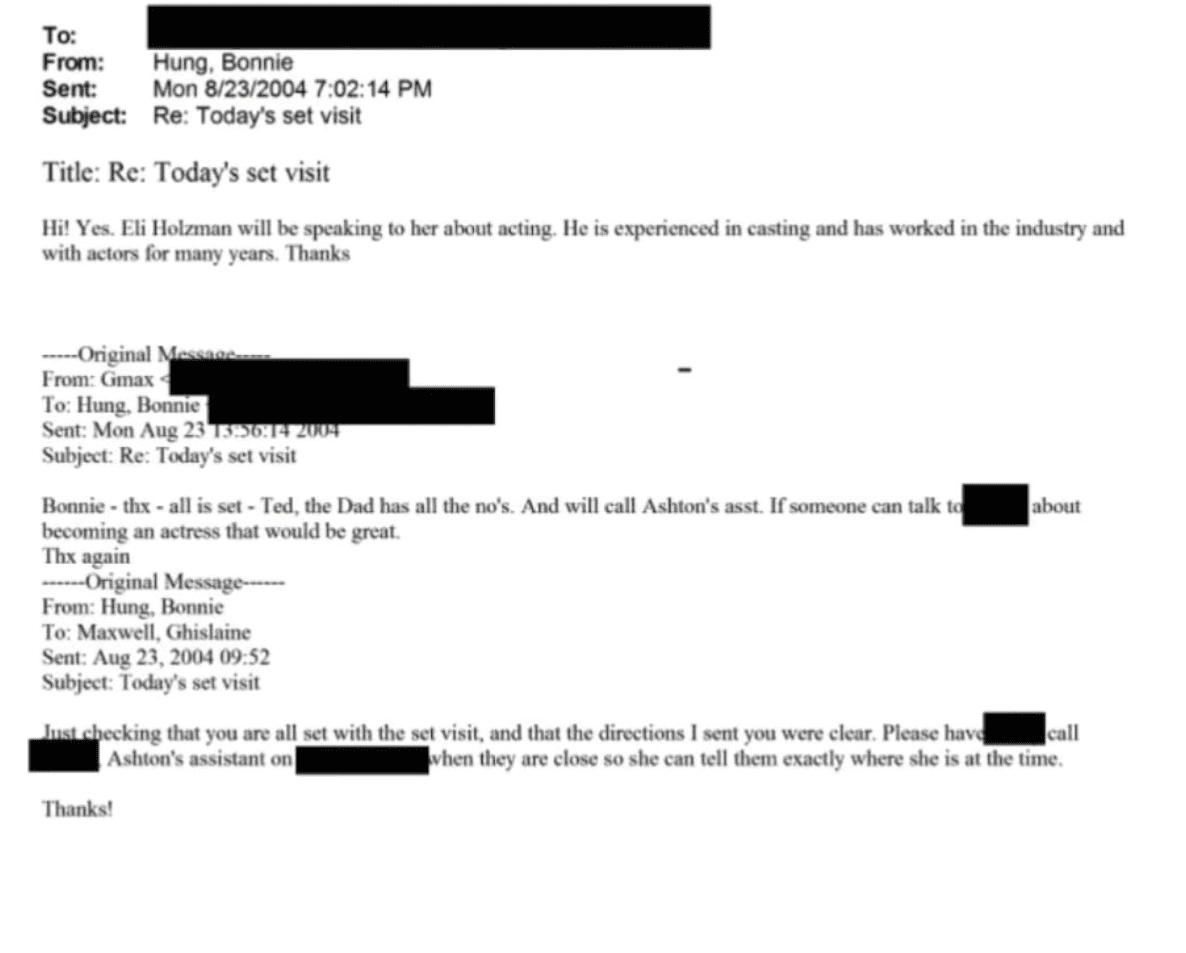

According to sources, the documents include email exchanges between Maxwell and members of Kutcher’s production team during the filming of MTV’s Punk’d. The correspondence detailed logistical arrangements for a set visit involving an underage girl and her father, including directions to a Manhattan Beach filming location and contact information for Kutcher’s assistant.

One email specifically mentions Eli Holzman, who left Miramax to become president of Kutcher’s production company in 2005, noting he would speak with the girl “about becoming an actress” given his “experience in casting” and work “with actors for many years.”

The documents do not show Kutcher personally writing to Maxwell. However, critics argue the optics are troubling: while Kutcher would later build a public image as a leading anti-trafficking advocate, members of his team were in documented communication with a woman later convicted of facilitating Epstein’s illegal activities.

The files are heavily redacted, leaving unanswered questions about context and the identities involved.

Additional scrutiny has centered on Kutcher’s venture capital partnerships. His investment firm was co-founded with billionaire Ron Burkle, whose name appeared in Epstein flight logs (though inclusion does not imply wrongdoing). Burkle has also maintained business and social connections within Hollywood’s elite circles.

Kutcher has previously faced media attention over his attendance at events hosted by Sean “Diddy” Combs, though no evidence has linked him to illegal conduct in those contexts.