In a recent episode of The Joe Rogan Experience, host Joe Rogan and filmmaker Roger Avary discussed the resignation of an engineer from major AI company Anthropic.

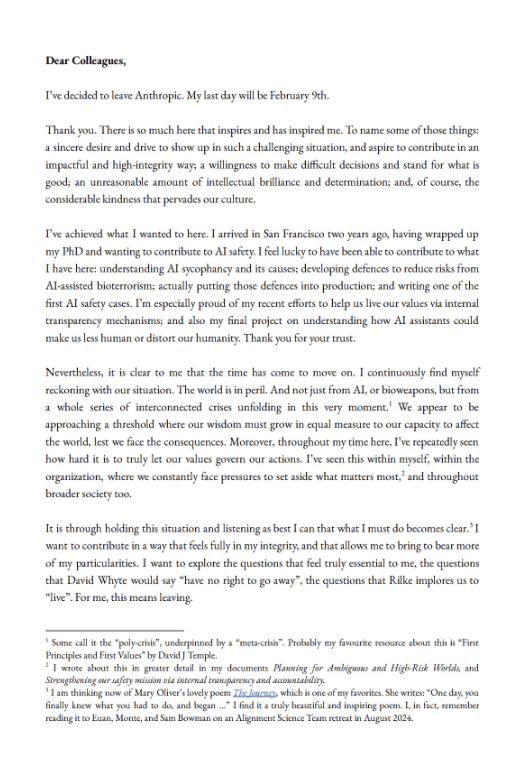

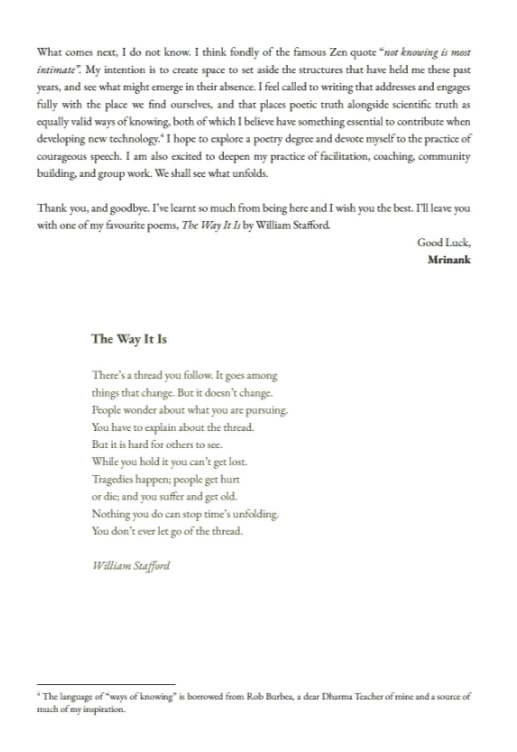

The engineer, who had been working on defenses against AI-assisted bioterrorism, announced his departure in a public letter to colleagues. His decision was particularly striking: rather than taking another high-powered tech position, he planned to return to the United Kingdom to pursue a poetry degree and step away from the spotlight entirely.

Rogan reacted to the story with bemusement. That someone would leave such a prominent role in artificial intelligence development to write poetry, at a time when the field is experiencing unprecedented growth and investment, struck both men as darkly humorous. The engineer’s chosen destination only added to the joke.

“Hasn’t that guy seen Threads?” Avary joked, referencing the apocalyptic British nuclear war film. “Like the UK is like one of the most dangerous places to be. That’s where he’s going to wait it out?”

The departure comes amid significant internal tensions at Anthropic, which recently launched Claude Opus 4.6 and secured a massive $20 billion funding round at a $350 billion valuation. The resignation follows other safety team departures.

In his resignation letter, the engineer wrote about creating space to “set aside the structures that have held me these past years and see what might emerge in their absence.”

He expressed feeling called to writing that “addresses and engages fully with the place we find ourselves and that places poetic truth alongside scientific truth as equally valid ways of knowing.”

The incident reflects a pattern of top AI experts at Anthropic, OpenAI, and other leading companies sounding increasingly urgent alarms about the dangers of the technology they are building. As models like Anthropic’s Claude and OpenAI’s ChatGPT improve at breakneck speed, excitement among AI optimists is rising. Yet for many safety researchers, that acceleration is terrifying.

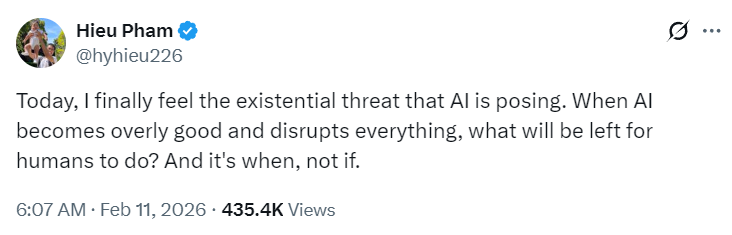

According to sources, OpenAI employee Hieu Pham wrote on X: “I finally feel the existential threat that AI is posing.”

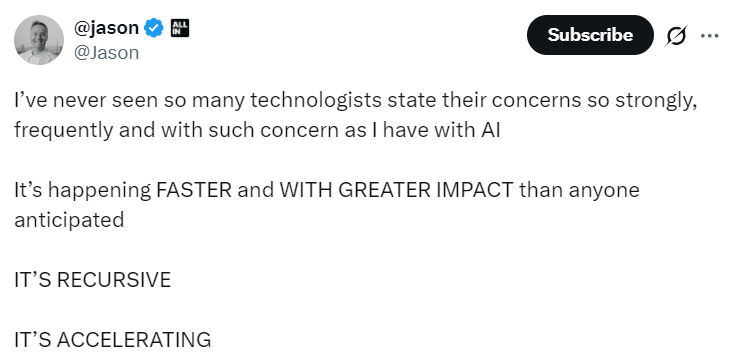

Tech investor Jason Calacanis also stated on X that he has “never seen so many technologists state their concerns so strongly, frequently and with such concern as I have with AI.”

The anxiety has spilled beyond internal circles. Entrepreneur Matt Shumer’s viral post comparing this moment to the eve of the pandemic drew massive attention, racking up 56 million views in just 36 hours as he warned about AI fundamentally reshaping jobs and daily life faster than society can absorb.

Anthropic itself has acknowledged these risks. The company recently published a report concluding that, although the probability remains low, AI systems could be used for heinous purposes, including the creation of chemical weapons. The report also examined the possibility of AI acting without human intervention, a scenario that once sounded like science fiction but is now being taken seriously.

Meanwhile, OpenAI has faced criticism after dismantling its mission alignment team, which was originally created to ensure artificial general intelligence benefits humanity.