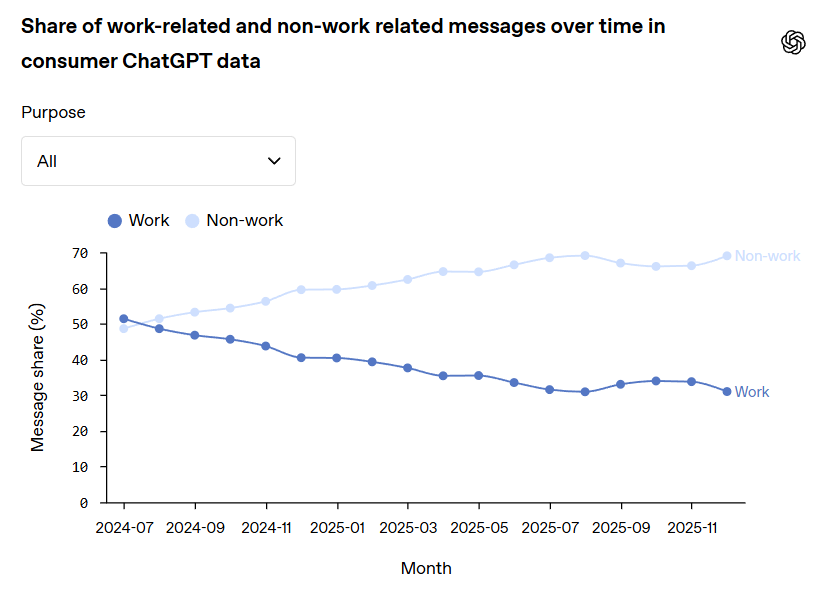

New research from OpenAI reveals a marked shift in how people use ChatGPT. Between July 2024 and December 2025, work-focused conversations on the consumer version of the platform steadily declined, while personal inquiries surged.

The company analyzed 100,000 anonymized conversations from both free and paid consumer accounts to identify these broad usage patterns.

This shift marks a departure from ChatGPT’s early adoption curve. When the tool first gained traction, employees frequently turned to the consumer version for professional tasks: drafting emails, analyzing data, troubleshooting code. Many became such devoted users that they later convinced their employers to purchase higher-priced enterprise subscriptions, creating a valuable pipeline for OpenAI’s business revenue stream.

Now that funnel appears to be narrowing. As fewer people rely on consumer ChatGPT for workplace projects, the path from individual user to corporate client may weaken. Enterprise accounts remain a crucial revenue target for OpenAI, making the decline in work-related usage a potential concern for the company’s growth strategy.

Personal conversations typically contain richer, more intimate data than professional exchanges. When users discuss relationship troubles, medical concerns, financial anxieties, or lifestyle decisions with an AI chatbot, they create the kind of detailed behavioral profiles that advertising platforms dream about. Unlike generic work queries about spreadsheet formulas or meeting schedules, personal discussions reveal preferences, vulnerabilities, and decision-making patterns that enable precision targeting.

OpenAI is already exploring how to capitalize on this opportunity. The company has confirmed it is experimenting with advertising models, positioning itself to potentially monetize the treasure trove of personal information flowing through its consumer platform. This stands in stark contrast to competitor Anthropic, which has publicly committed to remaining advertisement-free.

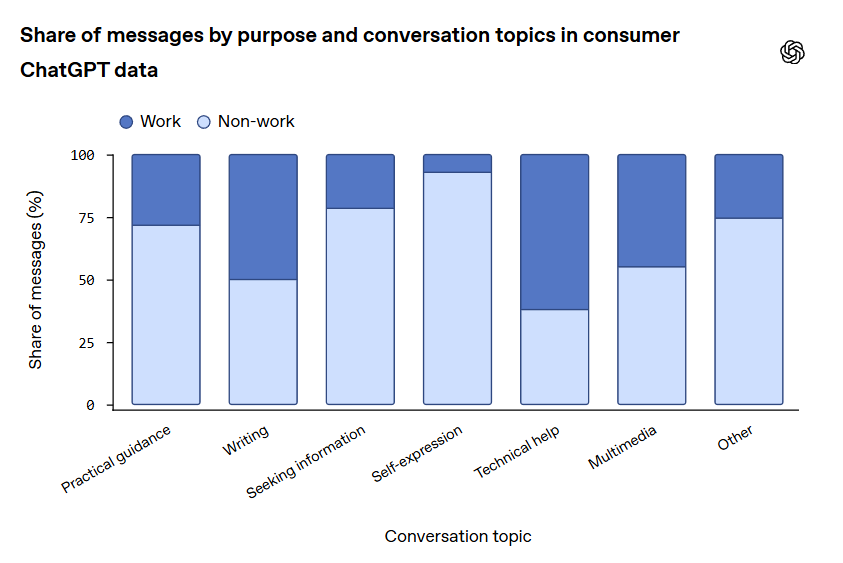

The advertising potential becomes clear when considering what “personal use” actually means in practice. People don’t just ask ChatGPT for recipe suggestions or movie recommendations. They also confide struggles with mental wellness, seek guidance on romantic relationships, explore career changes, and discuss family dynamics. Each conversation adds another data point to an increasingly detailed portrait of user interests, concerns, and behavior patterns.

For advertisers, this represents the holy grail: access to what people think about when no one else is watching, packaged in a format that reveals not just what they want, but why they want it.

The privacy implications extend beyond typical concerns about data collection. When users turn to AI for personal counsel, they often operate under the assumption of confidentiality.

The reality is far different. While OpenAI’s analysis focuses on aggregate patterns rather than individual behavior, the underlying data exists and could theoretically be mined for advertising purposes, even if anonymized or analyzed only in bulk.

As OpenAI explores revenue models beyond subscriptions and enterprise licensing, personal engagement becomes increasingly valuable. This doesn’t necessarily mean OpenAI is currently exploiting personal conversations for targeted advertisements. But the infrastructure and incentive structure are aligning in ways that make such exploitation possible.

The decline in work usage also points to an interesting psychological dimension. Judging by these trends, people seem increasingly willing to bring personal matters to AI rather than confine it to professional tasks, even though workplace queries carry far fewer privacy risks. A question about Excel formulas reveals little about who you are. A conversation about loneliness, insecurity, or personal fears reveals everything.