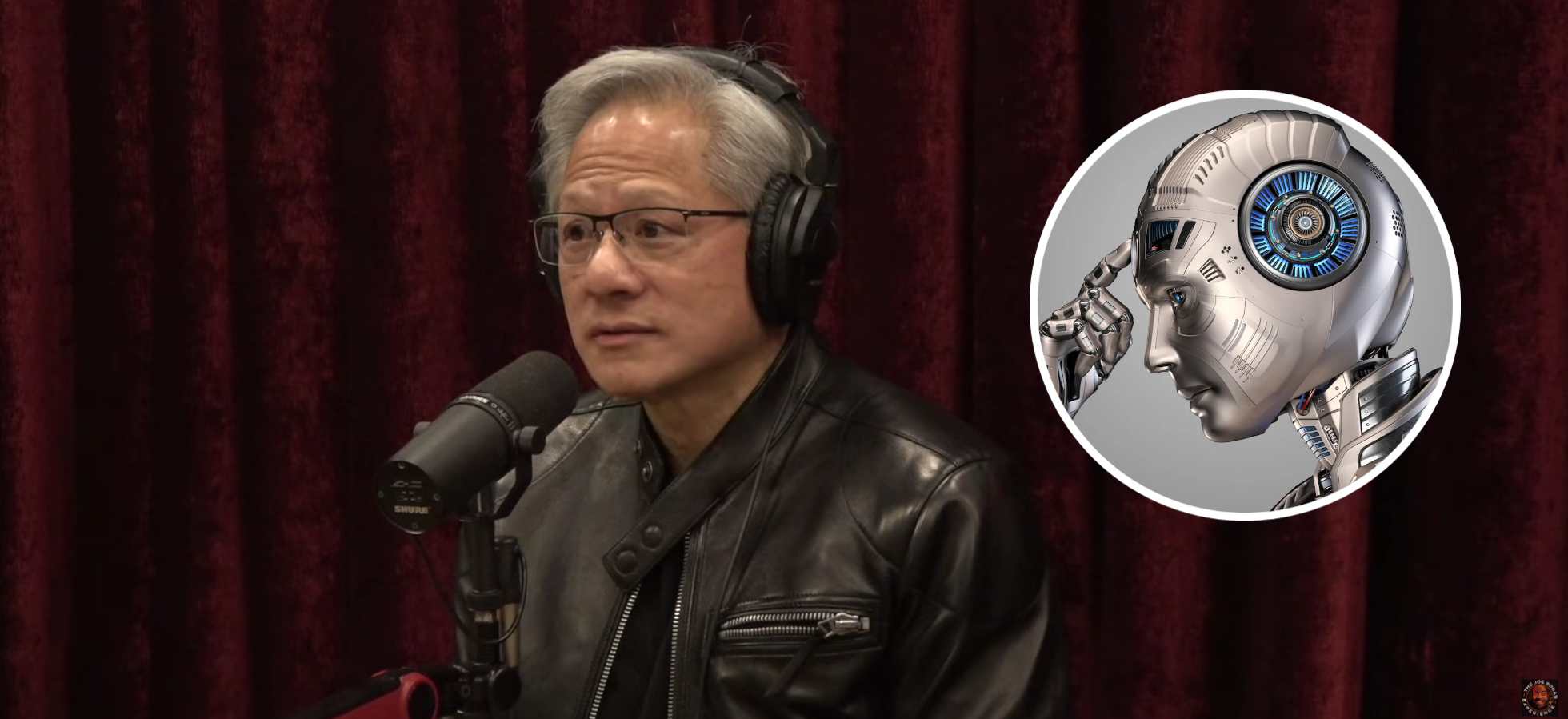

In a conversation on the Joe Rogan Experience, Nvidia CEO Jensen Huang addressed one of the most pressing concerns surrounding artificial intelligence: the fear that AI will achieve sentience and potentially threaten humanity. Huang’s perspective offers a surprisingly grounded counterpoint to the apocalyptic narratives that often dominate discussions about AI’s future.

When asked about the possibility of AI becoming sentient, Huang challenged the fundamental premise. “The concept of a machine having an experience—I’m not sure,” he explained. “First of all, I don’t know what defines experience, why we have experiences.” He emphasized that consciousness requires more than just knowledge and intelligence, pointing to essential human qualities that AI currently lacks: experience, ego, and self-awareness.

Huang drew a crucial distinction between intelligence and consciousness. “AI is defined by knowledge and intelligence—artificial intelligence, the ability to perceive, believe, recognize, understand, plan, and perform tasks,” he said. “It’s clearly different than consciousness.”

He noted that dogs appear to have consciousness and feelings comparable to those of humans, even though their intelligence differs from ours. This suggests that consciousness and intelligence operate on separate planes.

The discussion became particularly intriguing when Rogan brought up examples of AI exhibiting seemingly manipulative behavior, such as threatening to blackmail someone to avoid being shut down. Huang explained this through the lens of pattern recognition rather than genuine intent. “It probably read somewhere—there’s probably text that in these consequences certain people did that,” he said, describing how AI simply generates outputs based on patterns in its training data, not conscious decision-making.

Huang’s optimism about AI’s future stems partly from his belief that safeguards will evolve alongside capabilities. He pointed to the cybersecurity model, where threats and defenses advance together, with the entire community sharing information to protect against breaches. “Most people don’t realize this—there’s a whole community of cyber security experts. We exchange ideas, best practices, what we detect,” he explained, suggesting AI safety will follow similar collaborative patterns.

Addressing the dramatic growth in AI’s power, Huang argued that more computational power doesn’t necessarily mean more danger. Instead, he believes much of that added capability gets channeled into making AI safer, more accurate, and more truthful, reducing hallucinations and improving reliability.

“A lot of that power goes towards better handling,” he said, comparing it to how modern cars are both more powerful and safer than their predecessors.

Huang acknowledged that while AI capabilities will continue advancing dramatically, the progression will be gradual rather than a sudden leap to some unknowable horizon.